Your Interviewer Is a Machine. Now What?

How to stay human when algorithms decide if you're worth hiring

You did everything right. You did in-depth research on the company. You practiced your answers until you could say them in your sleep. You agonized over what to wear.

When you finally sat down at your laptop for the interview, you spoke to a software agent that was picking apart your word choices. Watching your face for micro-expressions. Timing your pauses. Matching your speech patterns against some model of "successful employees" — people you've never met, built from data you'll never see.

A recruiter received your final score, which determined whether you were worth hiring. Maybe they watched the video. Most likely, they didn't. Why bother? The machine helped them make the decision.

That's algorithmic hiring. Your first impression wasn't made on a human. It was made on an AI model, a machine.

What's Actually Being Measured

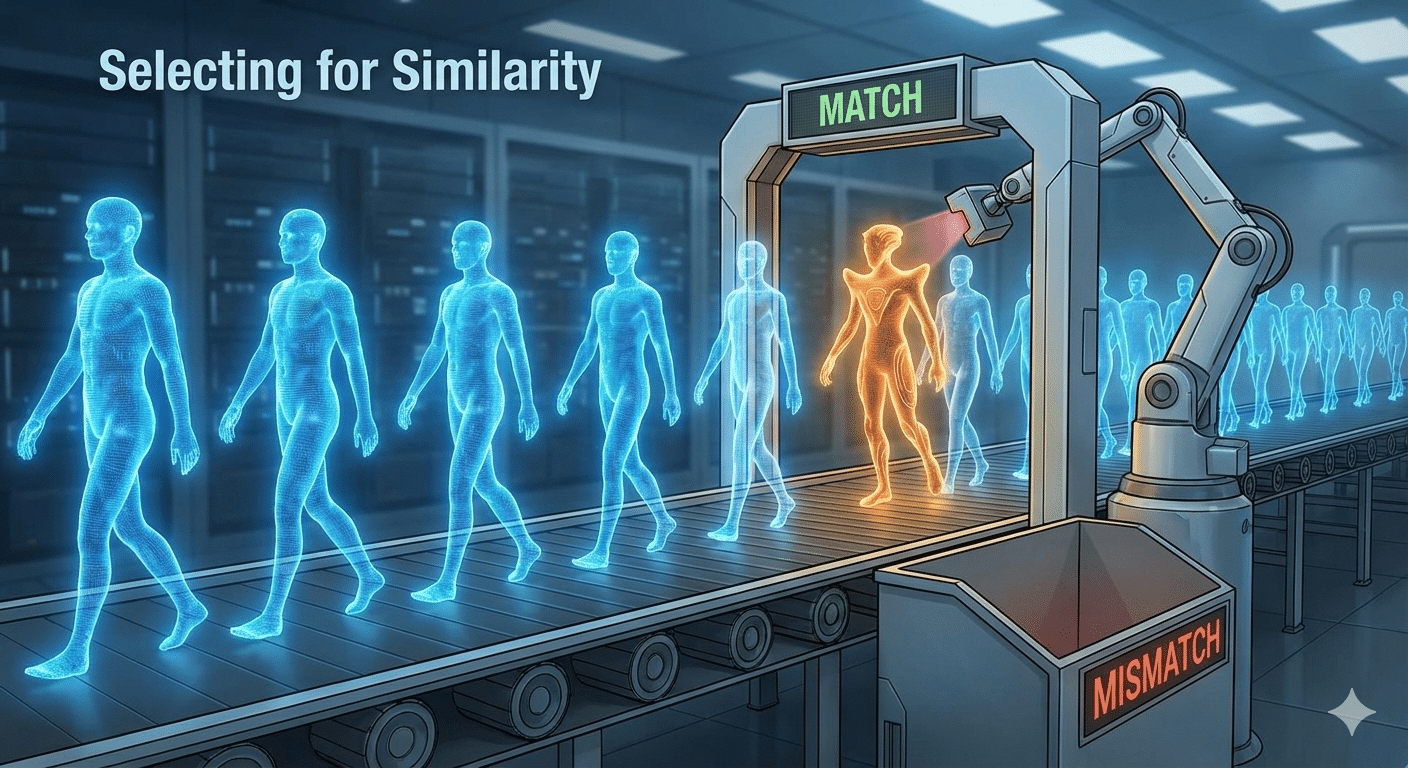

Let's be clear about what such interview tools optimize for: they match patterns to previous successes.

The AI takes in data from previous hires and builds a model from the patterns it finds: speech cadence, keyword frequency, facial movement patterns, and response length. Then it scores new candidates on their similarity to that model.

At first, this may sound like a reasonable, logical way of making decisions, but is it?

In reality, it is an automated way of asking whether this person resembles those who were successful hires before them.

The challenge is that your best future employee may not look, speak, or present like your current team. Innovation often comes from difference, yet the algorithm is designed to select for similarity.
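To make the idea of scoring by similarity concrete, here is a minimal sketch in Python. It is not how any particular vendor's product works. The four features, the sample numbers, and the function names `column_stats` and `similarity_score` are all assumptions made up for illustration. The sketch averages past hires into a "success model" and scores a candidate by how close they sit to that average.

```python
import math

# Hypothetical features per person (illustrative only, not from a real tool):
# [speech cadence in words/min, keywords per 100 words,
#  facial movement score, average response length in seconds]
PAST_HIRES = [
    [150.0, 6.0, 0.70, 45.0],
    [160.0, 7.0, 0.80, 50.0],
    [155.0, 6.5, 0.75, 48.0],
]


def column_stats(rows):
    """Return the mean and standard deviation of each feature."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    # Fall back to 1.0 when a feature never varies, to avoid dividing by zero.
    stds = [
        math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0
        for c, m in zip(cols, means)
    ]
    return means, stds


def similarity_score(candidate, rows=PAST_HIRES):
    """Score in (0, 1]; 1.0 means identical to the average past hire."""
    means, stds = column_stats(rows)
    # Distance from the "success model", in standard-deviation units.
    distance = math.sqrt(
        sum(((x - m) / s) ** 2 for x, m, s in zip(candidate, means, stds))
    )
    return 1.0 / (1.0 + distance)


lookalike = [156.0, 6.4, 0.76, 47.0]  # presents like the current team
outlier = [110.0, 2.0, 0.40, 90.0]    # slower, quieter, longer answers

print(similarity_score(lookalike) > similarity_score(outlier))
```

Nothing in this score measures whether the outlier would be good at the job. It only measures how far they are from people who were hired before.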

The Three Distortions

When machines do the hiring, several things change:

1. Trust Breakdown Before Day One

An interview is supposed to be a mutual exchange between people. You're evaluating them. They're evaluating you. Both parties build up a working relationship.

When one side is an AI, you never have that feeling of a mutually beneficial exchange.

You cannot read the room or tell if your joke landed. You can’t sense whether the “interviewer” is engaged or distracted, because there is no interviewer.

Candidates who pass through this process may expect a transactional relationship with the company. By delegating the interview to software, the organization signaled that meeting them in person was not a priority.

Trust does not decline over time; it never grows beyond the offer letter to begin with.

2. Identity Compression

Every AI interview platform is based on a "success model." Candidates who want to succeed must fit into that model.

Quirks are minimized. Unusual career paths are reframed as more conventional narratives. An authentic conversation gives way to one that aligns with expectations.

Candidates quickly learn that being themselves is not the right answer; they must act in ways that align with the model's expectations.

By the time they are hired, they may have already learned to present a version of themselves that is not entirely authentic, only to learn that their true self may not be what the organization is seeking.

The process of identity adjustment does not begin with unclear role expectations; it begins when candidates feel compelled to perform a version of themselves just to enter the organization.

3. The Feedback Void

When a human makes a hiring decision, there is at least the possibility of human feedback. You can ask for clarification, and expect a human answer.

When a machine makes the decision, there is often no feedback—just a form email stating that the organization has decided to move forward with other candidates.

You do not know if it was your word choice, your expression, your response time, or some other factor the algorithm considered—something a human might not even notice.

Without this information, it is difficult to improve.

This lack of transparency can lead people to optimize based on guesswork: Should I smile more? Should I use more action verbs? Should I look at the camera in a particular way?

Instead of learning to become better professionals, candidates are learning to navigate and adapt to an invisible system.

The Employer's Blind Spot

Companies adopt AI tools for volume, consistency, speed, and reduced bias.

But one question goes unasked: who fell through the AI interviewer's cracks that a human would have found qualified?

For example: the candidate who was nervous on camera but brilliant in conversation. The career-changer whose unusual background signaled adaptability, not risk. The introvert whose quiet confidence was mistaken for "low enthusiasm" by an AI model trained on extroverts.

You'll never know what opportunities you missed, since the AI only shows you what it approved.

Now What? (For Candidates)

If you're navigating AI interviews, here are some things to remember:

Recognize the nature of the process. This isn't a human interview. It's a performance for an AI. If you can, research the tool and adapt your behavior accordingly.

Don't internalize the judgment. An AI rejection is not the same as a human assessing your worth. It's an algorithm. It doesn't know you; it only knows a version of you.

Seek human contact anyway. Network around the algorithm. Find people at the company. Get referred. Many organizations let referrals skip or abbreviate the AI screen. The back door still exists.

Protect your real self. You may need to present a certain version of yourself to pass the screen, but that version is not your full identity. Do not let the process erode your sense of self.

Now What? (For Employers)

If you're using AI hiring tools:

Consider auditing for what may be missed. Occasionally, have humans review candidates rejected by AI; you may find that some valuable individuals were left out for no good reason.

Communicate clearly with candidates about the process. Transparency builds trust. For example, letting candidates know that the initial interview is AI-based and that they will speak with a real person in later rounds can make a difference.

Consider the message you are sending. If the first interaction with a candidate is through an AI, what does that say about your culture? Is that the first impression you want to make?

The interview is the beginning of the employee experience and relationship. If that initial stage feels impersonal, it may affect retention later.
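The audit suggested above, having humans review some of the candidates the AI rejected, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `sample_for_human_review` and `overturn_rate`, the 10% default, and the candidate IDs are hypothetical and not taken from any hiring platform.

```python
import random


def sample_for_human_review(rejected_ids, fraction=0.1, seed=None):
    """Pick a random subset of AI-rejected candidates for a human second look."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    if not rejected_ids:
        return []
    rng = random.Random(seed)  # a fixed seed makes the audit reproducible
    k = max(1, round(len(rejected_ids) * fraction))
    return rng.sample(list(rejected_ids), k)


def overturn_rate(human_decisions):
    """Share of sampled rejections that a human would have advanced.

    human_decisions maps candidate ID -> True if the reviewer would advance them.
    """
    if not human_decisions:
        return 0.0
    advanced = sum(1 for ok in human_decisions.values() if ok)
    return advanced / len(human_decisions)


# Hypothetical usage: 50 rejected candidates, review 10% of them by hand.
rejected = [f"cand-{i:03d}" for i in range(50)]
to_review = sample_for_human_review(rejected, fraction=0.1, seed=1)
print(len(to_review))
```

Random sampling matters here: if reviewers only look at rejections that seem borderline, they inherit the algorithm's own idea of who was close. A noticeable overturn rate is a sign that the screen is leaving out people a human would have wanted to meet.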

The Deeper Question

The question of what happens when your interviewer is a machine points to a deeper issue:

What kind of organization are we when we delegate human judgment to machines?

Hiring is about realizing human potential; it’s about finding qualities in people that data can’t capture.

AIs are not risk-takers; they are designed to optimize for situations they have encountered before.

While this may improve efficiency, it raises questions about the impact on humanity in the workplace.


Share This

If this resonates, share it with leaders who sense something is wrong but can't quite name it.

Use the diagnostic with your team. Start the conversation about which Culture Killers you're seeing and what you're going to do about them.

The patterns are predictable. The damage is reversible. But only if you act while the fractures are still invisible.